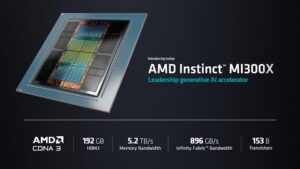

Shares of semiconductor leader Advanced Micro Devices have surged with the introduction of its new artificial intelligence (AI) accelerator, the MI300, which investors expect to emerge as a competitive alternative to Nvidia's GPUs and to become a license to print money for AMD, much as the H100 has been for Nvidia.

Background

Last January, AMD Chairman and CEO Dr. Lisa Su introduced the AMD Instinct MI300. She described it as the first data center chip to combine a CPU, GPU, and memory in a single package and announced that it would launch in the latter half of 2023, which happened earlier this month.

In a previous article posted on TalkMarkets, I wrote that "AMD is hoping its MI300X AI chip can stymie Nvidia's H100 graphics processing units (GPU) dominance." AMD must now also contend with Nvidia's H200 GPU, which Nvidia introduced today (see the News Flash below).

Technical information

AMD's MI300 series introduces two distinct models to serve different market needs. The MI300A integrates both CPU and GPU capabilities. The MI300X is a GPU-only product with 192GB of HBM3 memory, a 750W thermal design power (TDP), 8 GPU chiplets, and 5.2TB/s of memory bandwidth, aimed at customers who need extensive memory capacity to run the largest models. I encourage the technical nerds out there to check out the linked material for detailed technical information.
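
To make the memory-capacity point concrete, here is a rough sketch of how large a model's weights can fit on a single accelerator. It rests on assumptions that are not from the article: weights stored in FP16/BF16 at 2 bytes per parameter, 1 GB treated as 10^9 bytes, and the KV cache, activations, and runtime overhead ignored, so practical limits are lower. The 70B-parameter model and the 80GB H100 configuration are included purely for illustration.

```python
# Rough sketch: how large a model's weights can fit in one accelerator's memory.
# Assumptions (not from the article): FP16/BF16 weights at 2 bytes per parameter,
# 1 GB treated as 10**9 bytes, and KV cache / activations / runtime overhead
# ignored, so real-world limits are lower than these figures.

BYTES_PER_PARAM_FP16 = 2


def weights_gb(params_billions: float, bytes_per_param: float = BYTES_PER_PARAM_FP16) -> float:
    """Memory in GB needed just to hold a model's weights (1B params * 2 bytes = 2 GB)."""
    return params_billions * bytes_per_param


def max_params_billions(memory_gb: float, bytes_per_param: float = BYTES_PER_PARAM_FP16) -> float:
    """Largest model, in billions of parameters, whose weights alone fit in memory_gb."""
    return memory_gb / bytes_per_param


def fits_on_one_device(params_billions: float, memory_gb: float) -> bool:
    """True if the weights alone fit on a single device (all overhead ignored)."""
    return weights_gb(params_billions) <= memory_gb


DEVICES_GB = {
    "MI300X": 192,          # capacity quoted in the article
    "H100 (80GB SXM)": 80,  # comparison point, not quoted in the article
}

model_b = 70  # hypothetical 70B-parameter model, chosen only for illustration
print(f"{model_b}B params in FP16 need ~{weights_gb(model_b):.0f} GB for weights")
for name, gb in DEVICES_GB.items():
    print(
        f"{name}: up to ~{max_params_billions(gb):.0f}B params; "
        f"{model_b}B model fits on one device: {fits_on_one_device(model_b, gb)}"
    )
```

Under these simplifications, a model that has to be split across two 80GB devices can sit on a single 192GB MI300X. This is the practical sense in which memory capacity "serves as a limiting factor" for LLMs.
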

Marketing Plans

According to anandtech.com, the "MI300 family remains on track to ship at some point later this year and is already sampling potential customers." Meanwhile, AMD is poised to start shipping the MI300X to cloud providers and OEMs in the next few weeks, positioning it to compete directly with Nvidia's established data center solutions in the AI training market segment.

The introduction of a 192GB GPU by AMD is a significant development for large language models (LLMs), where memory capacity is often a limiting factor, and it could give AMD a sizable advantage in the current market. That advantage, however, hinges on the successful deployment and market reception of the MI300X. The critical factor will be its ability to win substantial contracts against Nvidia, which holds a dominant 80% share of this market.

Growth Expectations

Patrick Moorhead, CEO of Moor Insights & Strategy, projects that the AI accelerator market could reach a total addressable market of $125B by 2028. AMD's Su is even more optimistic. She expects the market to grow five-fold between now and 2027 to $150B, which implies a market of about $30B today and compound annual growth of roughly 50%. Su projects that AMD's data center GPU revenue will reach $400M in the fourth quarter of 2023 and exceed $2B in 2024, driven by its MI300 product lineup.

Conclusion

The MI300 series gives AMD a fresh product to sell into a market that is buying up all the hardware it can get. As a result, expectations are high that the MI300 series will play a crucial role in the company's financial performance. In fact, AMD investors are hoping the MI300 series will become AMD's license to print money, much as the H100 has been for Nvidia.

News Flash!

Nvidia announced today, Monday, November 13th, that it plans to bring to market an updated version of the stand-alone H100 accelerator with 141GB of HBM3E memory, which the company will call the H200. It is expected to ship in the second quarter of 2024 and compete with AMD's MI300X GPU. Nvidia says the H200 will generate output nearly twice as fast as the H100, thanks to its 141GB of next-generation HBM3E memory. Even so, it still has considerably less memory than the 192GB of HBM3 on AMD's new MI300X. It also has a lower thermal design power (700W versus 750W) and less memory bandwidth (4.8TB/s versus 5.2TB/s).
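
The headline numbers in the News Flash can be compared side by side with a small sketch. It uses only the figures quoted above and adds one simplifying assumption of its own. For single-request text generation, each new token requires reading every weight from memory once, so memory bandwidth sets a floor on time per token. The 140GB weight size (a hypothetical 70B-parameter FP16 model) is also an assumption. Real throughput additionally depends on compute, software, batching, and interconnect, so treat the output as an illustration, not a benchmark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AcceleratorSpec:
    name: str
    memory_gb: float       # on-package HBM capacity, GB
    bandwidth_tb_s: float  # peak memory bandwidth, TB/s
    tdp_w: float           # thermal design power, watts


# Announced figures as quoted in this article (not independent measurements).
MI300X = AcceleratorSpec("MI300X", memory_gb=192, bandwidth_tb_s=5.2, tdp_w=750)
H200 = AcceleratorSpec("H200", memory_gb=141, bandwidth_tb_s=4.8, tdp_w=700)


def spec_ratios(a: AcceleratorSpec, b: AcceleratorSpec) -> dict[str, float]:
    """How many times larger each of a's headline specs is than b's."""
    return {
        "memory": a.memory_gb / b.memory_gb,
        "bandwidth": a.bandwidth_tb_s / b.bandwidth_tb_s,
        "tdp": a.tdp_w / b.tdp_w,
    }


def min_ms_per_token(weights_gb: float, spec: AcceleratorSpec) -> float:
    """Simplified floor for batch-size-1 decoding (an assumption, not a vendor
    figure): every generated token streams all weights from memory once, so
    time >= bytes / bandwidth. GB divided by TB/s comes out in milliseconds."""
    return weights_gb / spec.bandwidth_tb_s


for key, value in spec_ratios(MI300X, H200).items():
    print(f"MI300X / H200 {key}: {value:.2f}x")

weights = 140  # hypothetical 70B-parameter model in FP16 (assumption)
for spec in (MI300X, H200):
    print(f"{spec.name}: >= {min_ms_per_token(weights, spec):.1f} ms per token")
```

Under this simplified model, the MI300X's roughly 8% bandwidth edge translates into a proportionally lower floor per token. Its roughly 36% larger memory matters more for whether a given model fits on one device at all.
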

AMD’s New AI Accelerators: A License To Print Money?